When people hear phrases like ‘artificial intelligence (AI)’ and ‘machine learning (ML)’, they often picture mysterious code and ‘black-box’ technology. The good news? Behind the scenes, it is far more human than it seems. At its heart, modern AI is mathematics in action: applied mathematics paired with practical computer science, which together make machines capable of seeing, reasoning, and deciding.

When we say applied mathematics and artificial intelligence, we mean the structured, real-world mathematics (vectors, probabilities, optimization rules) that powers AI. This basic understanding is not just for mathematicians. It is for anyone who wishes to learn how AI systems work and build a career in this revolutionary technology.

To do so, start by answering a few basic questions:

- Why applied mathematics matters in AI (Why math?)

- The core mathematical pillars of AI systems (What math?)

- How more advanced branches of math are shaping next-generation AI (What’s next?)

- The real-world effect: more efficient, fairer, explainable AI (Why does this matter?)

1. The Mathematical Foundations Behind AI

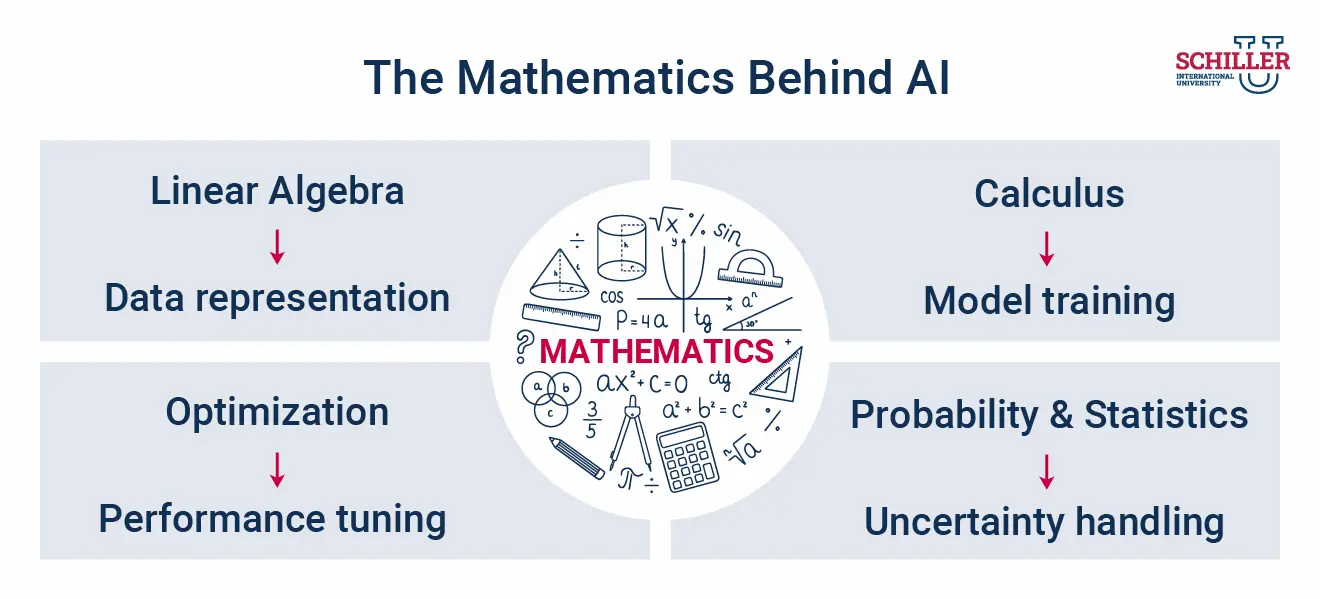

AI may look like pure technology, but every smart system, from your phone’s voice assistant to predictive healthcare models, is quietly powered by mathematics. Four main areas of applied math work together to make AI learn, reason, and make decisions: linear algebra, calculus, probability and statistics, and optimization.

Linear Algebra: The Language of Data

Linear algebra helps computers understand and organize the world around us. It is how images, sounds, or even words become numbers that machines can work with.

- Turning things into data: Every photo, voice recording, or sentence is translated into numbers, arranged as vectors and matrices, that capture patterns and relationships.

- Building neural networks: Deep learning models rely on fast matrix multiplication to pass signals between layers of ‘neurons’ and to update the connections between them during training. This is what lets AI systems recognize faces, translate languages, or detect spam.

- Simplifying information: Techniques such as Principal Component Analysis (PCA) reduce large datasets to their most meaningful directions of variation, helping AI find structure in chaos.
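Both ideas above can be sketched in a few lines of plain Python (no libraries assumed). `dense_layer` computes one neural-network layer as a matrix-vector product plus a bias, followed by a ReLU activation; `first_principal_component` finds the PCA direction of greatest variance by power iteration on the covariance matrix. The function names and toy data are illustrative assumptions; real systems use optimized libraries such as NumPy.

```python
import math

def matvec(W, x):
    """Multiply a matrix W (a list of rows) by a vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def dense_layer(W, b, x):
    """One neural-network layer: weighted sums W·x + b, then a ReLU activation."""
    return [max(0.0, z + bi) for z, bi in zip(matvec(W, x), b)]

def first_principal_component(points, iters=200):
    """Unit vector along the direction of greatest variance (PCA), via power iteration."""
    n, d = len(points), len(points[0])
    mean = [sum(p[j] for p in points) / n for j in range(d)]
    centered = [[p[j] - mean[j] for j in range(d)] for p in points]
    # Sample covariance matrix: how each pair of features varies together.
    cov = [[sum(c[i] * c[j] for c in centered) / (n - 1) for j in range(d)]
           for i in range(d)]
    v = [1.0] * d
    for _ in range(iters):
        w = matvec(cov, v)               # repeatedly multiplying by cov ...
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]        # ... converges to its top eigenvector
    return v

# Toy 2-D data lying roughly along the line y = 2x.
data = [(1.0, 2.1), (2.0, 3.9), (3.0, 6.2), (4.0, 7.8)]
print(dense_layer([[1.0, -1.0], [0.5, 0.5]], [0.0, -1.0], [2.0, 1.0]))  # [1.0, 0.5]
print(first_principal_component(data))  # roughly parallel to (1, 2)
```

The principal component summarizes two numbers per point with a single direction, which is the "most meaningful part" PCA keeps.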

In simple terms, linear algebra helps AI see and represent the world in numbers.

Calculus: How AI Learns and Improves

Calculus deals with change, and that is exactly what learning is. AI uses it to understand how to get better with each attempt.

- Learning from mistakes: The ‘gradient descent’ method uses derivatives to determine how much, and in which direction, to adjust a model’s settings after each error, improving predictions step by step.

- Backpropagation: This process uses the chain rule from calculus to pass feedback backward through all the layers of a network, so every layer can fine-tune its own settings.

- Understanding change: In robotics or weather prediction, calculus helps AI model how things move and shift over time.
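The sketch below shows gradient descent and backpropagation on the smallest possible ‘network’: a single neuron with a sigmoid activation and a squared-error loss, trained on one example. These modelling choices, and the names `gradients` and `train`, are illustrative assumptions; real frameworks compute the same chain-rule products automatically across millions of weights.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def loss(w, b, x, y):
    """Squared error of a single sigmoid 'neuron' on one training example."""
    return (sigmoid(w * x + b) - y) ** 2

def gradients(w, b, x, y):
    """Backpropagation by hand: apply the chain rule through loss -> sigmoid -> w*x + b."""
    p = sigmoid(w * x + b)
    dloss_dp = 2.0 * (p - y)        # d/dp of (p - y)^2
    dp_dz = p * (1.0 - p)           # derivative of the sigmoid at z
    dloss_dz = dloss_dp * dp_dz     # chain rule
    return dloss_dz * x, dloss_dz   # dz/dw = x and dz/db = 1

def train(x, y, lr=0.5, steps=200):
    """Plain gradient descent: repeatedly step against the gradient."""
    w, b = 0.0, 0.0
    for _ in range(steps):
        gw, gb = gradients(w, b, x, y)
        w -= lr * gw
        b -= lr * gb
    return w, b

w, b = train(x=1.0, y=1.0)
print(loss(0.0, 0.0, 1.0, 1.0), "->", loss(w, b, 1.0, 1.0))
```

Each pass is one ‘try again, but smarter’ step: the loss starts at 0.25 and shrinks as the weights move against the gradient.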

Essentially, calculus helps AI learn from every outcome, a mathematical way of saying, ‘try again, but smarter.’

Probability and Statistics: Making Sense of Uncertainty

No real-world data is perfect, so AI faces uncertainty every day: missing details, human error, or unexpected patterns. That is where probability and statistics step in.

- Dealing with unknowns: Probability helps AI assign confidence levels (“I’m 80% sure this email is spam”) instead of making blind guesses.

- Finding connections: Bayesian networks and other statistical tools help AI see how one factor affects another, such as how certain symptoms relate to a diagnosis.

- Checking reliability: Statistical techniques, such as testing a model on held-out data it never saw during training, check that models are not just memorizing data but can also perform well in real situations.
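The spam example above can be made concrete with Bayes’ rule. This minimal sketch uses a naive Bayes model, which assumes words occur independently given whether an email is spam; the prior and word probabilities are hypothetical numbers chosen for illustration, not measured values.

```python
def posterior_spam(prior_spam, word_likelihoods):
    """Naive Bayes estimate of P(spam | observed words).

    word_likelihoods holds one (P(word | spam), P(word | not spam)) pair per
    observed word; words are assumed independent given the class.
    """
    score_spam = prior_spam
    score_ham = 1.0 - prior_spam
    for p_word_spam, p_word_ham in word_likelihoods:
        score_spam *= p_word_spam
        score_ham *= p_word_ham
    # Bayes' rule: normalize so the two class probabilities sum to 1.
    return score_spam / (score_spam + score_ham)

# Hypothetical numbers: 30% of mail is spam; a word like "winner" appears in
# 60% of spam and 5% of legitimate mail.
print(round(posterior_spam(0.3, [(0.6, 0.05)]), 3))  # 0.837
```

Instead of a blind yes/no, the model reports a confidence of roughly 84%, and each additional piece of evidence updates that number.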

Probability and statistics help AI make smarter, more confident decisions in an unpredictable world.

Optimization: Finding the Best Possible Answer

Every AI system is, at its core, trying to find the ‘best fit.’ Optimization theory gives it the tools to do that.

- Finding what works: Algorithms like gradient descent adjust thousands, or in large models billions, of parameters until performance improves.

- Handling complexity: Modern AI deals with messy, ‘non-convex’ problems whose landscapes have many peaks and valleys, so advanced optimization methods aim to find good-enough solutions quickly rather than a guaranteed best one.

- Creative problem-solving: Sometimes AI uses bio-inspired methods like genetic algorithms or swarm intelligence to explore possible answers when gradient-based methods struggle.
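As a rough illustration of a bio-inspired method, the sketch below runs a very small genetic algorithm (selection, blend crossover, Gaussian mutation) on the Rastrigin function, a standard non-convex test function with many local minima and a global minimum of 0 at x = 0. The population size, mutation strength, and operators are illustrative choices, not a tuned or canonical implementation.

```python
import math
import random

def rastrigin(x):
    """1-D non-convex test function: global minimum 0 at x = 0, many local minima."""
    return x * x - 10.0 * math.cos(2.0 * math.pi * x) + 10.0

def genetic_minimize(f, pop_size=30, generations=100, sigma=0.3, seed=0):
    rng = random.Random(seed)
    population = [rng.uniform(-5.0, 5.0) for _ in range(pop_size)]
    for _ in range(generations):
        population.sort(key=f)                 # selection: rank by fitness (lower is better)
        parents = population[: pop_size // 2]  # keep the best half unchanged (elitism)
        children = []
        while len(parents) + len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            child = (a + b) / 2.0              # crossover: blend two parents
            child += rng.gauss(0.0, sigma)     # mutation: random exploration
            children.append(child)
        population = parents + children
    return min(population, key=f)

best = genetic_minimize(rastrigin)
print(best, rastrigin(best))
```

Because the best half always survives, the best answer found never gets worse from one generation to the next, while mutation keeps exploring other valleys.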

Optimization is how AI moves from ‘good enough’ to ‘remarkably effective.’

Together, these four areas of applied mathematics give AI its foundation. They help machines learn, adapt, and reason about the world around them.

2. Advanced Mathematical Concepts Powering Next-Gen AI

When we step beyond the familiar pillars of linear algebra, calculus, and statistics, we enter an exciting frontier where more abstract areas of applied mathematics are shaping the future of AI systems.

Differential and Information Geometry

Imagine data as a landscape. Traditional geometry deals with flat spaces (Euclidean space), but much real-world data, and many of the spaces that AI models operate in, are curved, warped, or ‘manifold-like.’ That is where differential geometry comes in.

- Manifold learning is the idea that even when data appear high-dimensional (lots of features), they might lie on a much lower-dimensional ‘surface’ embedded in that space. In other words, while each image in your dataset might contain thousands of numbers, the meaningful patterns may lie along a curved, folded surface that geometry helps us describe.

- Geometric deep learning extends these ideas to graphs, point clouds, and other non-flat data: rather than treating every dataset as if it sits on a flat grid, it lets AI work directly with nodes, edges, and surfaces.

- Information geometry views the space of probability distributions as a curved geometric space. This gives us smarter ways to optimize models and to understand how confident a model is, for example, via ‘natural gradient’ methods rather than plain gradient descent.
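For a concrete feel for the natural gradient, consider the simplest statistical model: a coin with probability p of heads, fit to data in which a fraction q of flips were heads. For this Bernoulli model the Fisher information is 1/(p(1 - p)), so the natural gradient (inverse Fisher information times the ordinary gradient) simplifies to p - q. The toy model and function names are illustrative assumptions, not a general-purpose optimizer.

```python
def nll_gradient(p, q):
    """d/dp of the average negative log-likelihood of a Bernoulli(p) model
    on data in which a fraction q of outcomes are 1."""
    return (p - q) / (p * (1.0 - p))

def fisher_information(p):
    """Fisher information of Bernoulli(p): the local curvature of the distribution space."""
    return 1.0 / (p * (1.0 - p))

def plain_gradient_step(p, q, lr):
    return p - lr * nll_gradient(p, q)

def natural_gradient_step(p, q, lr):
    # Precondition the ordinary gradient with the inverse Fisher information,
    # so the step size reflects how much the *distribution* changes.
    return p - lr * nll_gradient(p, q) / fisher_information(p)

q = 0.7  # observed fraction of heads (toy value)
for p in (0.1, 0.5):
    print(p, plain_gradient_step(p, q, lr=0.1), natural_gradient_step(p, q, lr=0.5))
```

From p = 0.1 the plain step overshoots past q (to about 0.77), while from p = 0.5 it moves only to 0.58; the natural step covers half the remaining distance to q from both starting points, because it measures distance between distributions rather than between raw parameter values.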

Geometry gives AI a language for recognizing the shape of data, how it bends, twists, and relates, so that models can better mirror the real-world structure of what they are analyzing.

Category Theory

If geometry helps us see the shape of data, category theory helps us see the structure of structures. It might sound ultra-abstract, but its end goal is practical: to build AI systems that are more modular, explainable, and broadly applicable.

- At its core, category theory deals with objects and the relationships (morphisms) between them, and how to compose those relationships in consistent ways.

- Researchers are now using it to unify different AI architectures, describing convolutional networks, graph neural networks, and transformer-style models in a common mathematical language. For instance, the position paper “Position: Categorical Deep Learning is an Algebraic Theory of All Architectures” argues that category theory can describe many kinds of neural networks.

- It also plays a role in explainable AI. By giving a formal mathematical frame for ‘what is an explanation’ or ‘how do modules compose,’ category theory helps make AI’s reasoning paths clearer.
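Composition and identity can be illustrated in ordinary code. Loosely, Python types play the role of objects and functions the role of morphisms; `compose` builds g ∘ f, and the laws that make composition ‘consistent’ are associativity and identity. The three pipeline stages below are hypothetical toy modules, and this is a far simpler picture than the formalism in the cited paper.

```python
from typing import Callable, TypeVar

A = TypeVar("A")
B = TypeVar("B")
C = TypeVar("C")

def compose(g: Callable[[B], C], f: Callable[[A], B]) -> Callable[[A], C]:
    """Morphism composition g ∘ f: apply f first, then g."""
    return lambda x: g(f(x))

def identity(x):
    """Identity morphism: composing with it changes nothing."""
    return x

# Three toy pipeline stages (hypothetical), each a "morphism" between data types.
def tokenize(text: str) -> list[str]:
    return text.split()

def embed(tokens: list[str]) -> list[int]:
    return [len(t) for t in tokens]

def pool(vector: list[int]) -> float:
    return sum(vector) / len(vector)

# Associativity: grouping the compositions differently gives the same pipeline.
left = compose(pool, compose(embed, tokenize))
right = compose(compose(pool, embed), tokenize)
print(left("a bb ccc"), right("a bb ccc"))  # 2.0 2.0
```

Because these laws hold, modules can be swapped or regrouped without changing what the overall pipeline means, which is the kind of modularity the bullets above describe.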

Category theory is like the ‘grammar and syntax’ of AI systems. It is how different components speak to each other, combine, and grow into more capable structures.

3. Why This Matters for Next-Generation AI

These advanced mathematical tools are not just academic curiosities. They are enabling AI systems that are smarter, more efficient, and more aligned with real-world complexity.

- Handling complex data forms: Think social networks, molecule graphs, 3D point clouds, or non-grid sensor data. Geometry and graph-based methods allow AI to learn from them beyond the ‘flat image’ paradigm.

- Improving modularity and transferability: With category theory, we can design AI architectures that reuse and recombine components in new ways, leading to faster development and less reinvention.

- Better reasoning under uncertainty and structure: Information geometry supports optimization and probabilistic reasoning in curved spaces rather than flat ones, helping AI behave more reliably.

- Richer explainability and fairness: By understanding the shapes and flows of model reasoning, we stand a better chance of detecting bias, making decisions transparently, and ensuring AI works for people of all backgrounds.

4. Applied Mathematics for Smarter, Fairer AI

Applied mathematics is used not just to make AI powerful, but to make it efficient, fair, and ready for real-world use.

Making AI More Efficient

Every AI model consumes computing power, memory, and energy, and must respond quickly enough to be useful. Math helps reduce these costs so AI can run not only in labs but also on your phone or smart device.

- Model compression: Techniques like quantization (storing model weights with fewer bits) and low-rank matrix approximations (replacing a large weight matrix with the product of smaller ones) come from linear algebra and optimization. They make models smaller and faster (SpringerLink, 2024).

- Edge-friendly AI: These mathematical shortcuts allow AI to run on small devices, not just giant servers.

- Balancing trade-offs: Optimization math helps answer questions like ‘If we make the model smaller, how much accuracy do we lose?’ and ‘What’s the fastest way to get a good-enough answer?’
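Here is a minimal sketch of both compression ideas in plain Python. `quantize_int8` uses symmetric 8-bit quantization, one common scheme among several, storing each weight as an integer in [-127, 127] plus one shared scale (it assumes at least one nonzero weight). `rank1_approximation` uses power iteration to find the best rank-1 approximation σ·u·vᵀ of a matrix. Names and data are illustrative; production toolkits use more elaborate schemes.

```python
import math

def quantize_int8(weights):
    """Symmetric 8-bit quantization: integers in [-127, 127] plus one shared float scale.
    Assumes at least one weight is nonzero."""
    scale = max(abs(w) for w in weights) / 127.0
    q = [round(w / scale) for w in weights]
    return q, scale

def dequantize(q, scale):
    return [qi * scale for qi in q]

def rank1_approximation(W, iters=100):
    """Best rank-1 approximation sigma * u v^T of W, via power iteration.
    Stores len(u) + len(v) + 1 numbers instead of rows * cols."""
    rows, cols = len(W), len(W[0])
    v = [1.0] * cols
    for _ in range(iters):
        u = [sum(W[i][j] * v[j] for j in range(cols)) for i in range(rows)]  # u = W v
        u_norm = math.sqrt(sum(x * x for x in u))
        u = [x / u_norm for x in u]
        v = [sum(W[i][j] * u[i] for i in range(rows)) for j in range(cols)]  # v = W^T u
        sigma = math.sqrt(sum(x * x for x in v))
        v = [x / sigma for x in v]
    return sigma, u, v

def reconstruct(sigma, u, v):
    return [[sigma * ui * vj for vj in v] for ui in u]

weights = [0.5, -1.27, 0.02, 1.0]
q, scale = quantize_int8(weights)
print(q)                         # [50, -127, 2, 100]
print(dequantize(q, scale))      # each value within scale / 2 of the original
```

The trade-off question above becomes measurable: the rounding error of quantization is at most half the scale per weight, and for a matrix that is not exactly rank-1, the gap between `W` and its reconstruction is the accuracy given up for the smaller footprint.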

Math helps AI work smarter, not harder.

Making AI Fair and Transparent

Math also helps ensure AI decisions are fair, explainable, and trustworthy.

- Checking bias: Statistical tools measure whether AI treats different groups, such as people of different ages or genders, equally. This is based on probability and statistics (TUM Institute for Ethics in AI, 2024).

- Building fairness into AI models: Math allows fairness rules to be added directly to training algorithms, for example as constraints or penalties, so the model is pushed away from biased predictions.

- Finding balance: Improving fairness can affect accuracy, and optimization methods help find a healthy middle ground. Massachusetts Institute of Technology (MIT) researchers, for example, designed systems that can ‘pause’ a decision if the data seems unfair.

- Auditing compressed models: When models are made smaller for efficiency, math helps to check if fairness has been lost, and how to fix it.
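One of the simplest bias checks compares selection rates, the share of positive decisions, across groups; the largest gap is often called the demographic parity difference. This sketch assumes binary predictions and a single group label per person; the loan data below is hypothetical. Demographic parity is only one of several fairness definitions, and which one fits depends on the application.

```python
from collections import defaultdict

def selection_rates(predictions, groups):
    """Fraction of positive predictions (e.g. 'approve') within each group."""
    totals, positives = defaultdict(int), defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}

def demographic_parity_gap(predictions, groups):
    """Largest difference in selection rate between any two groups (0 means parity)."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())

# Hypothetical loan approvals (1 = approve) for applicants in two age groups.
preds = [1, 0, 1, 1, 0, 1, 0, 0]
groups = ["under_40"] * 4 + ["over_40"] * 4
print(selection_rates(preds, groups))         # {'under_40': 0.75, 'over_40': 0.25}
print(demographic_parity_gap(preds, groups))  # 0.5
```

The same check can audit a compressed model: compute the gap for the original model’s predictions and for the compressed model’s predictions on the same people, and compare.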

Math helps AI make not just faster decisions but fair ones.

Why This Matters for You

If you want to study applied mathematics with a focus on AI, it is helpful to know this:

- Your mathematical training lets you build AI that is not only smart but also reliable and responsible.

- Understanding the math behind efficiency means building AI that can scale and be practical.

- Understanding the math behind fairness means building AI that serves everyone and treats people fairly.

AI may look like a field of code, but it runs on human logic expressed through mathematics. From recognizing images to making fairer decisions, AI depends on applied math to stay efficient, transparent, and grounded in reason. For you, this connection matters deeply. Studying applied mathematics is not just about solving abstract equations; it is about understanding how those equations shape real technology.

Explore Schiller International University's BSc in Applied Mathematics and Artificial Intelligence program if you want to design smarter models, improve fairness, or simply understand how machines learn.

FAQs

Q1. Why is applied mathematics important for AI?

Answer: Applied mathematics gives AI its foundations. It helps systems represent data, learn from mistakes, make predictions, and find the best solutions efficiently. Without math, AI cannot reason or improve.

Q2. What areas of applied mathematics are most important to artificial intelligence?

Answer: The four core areas are:

- Linear algebra (data representation)

- Calculus (learning and change)

- Probability and statistics (handling uncertainty)

- Optimization (finding the best outcomes)

Q3. How is math used to improve machine learning models and accuracy?

Answer: Math powers training and fine-tuning. Linear algebra represents data as vectors and matrices, calculus drives backpropagation, and optimization algorithms reduce errors until performance improves.

Q4. Can someone succeed in an AI career without advanced mathematical skills?

Answer: You can start learning AI with basic math, but progressing to advanced roles requires a deeper understanding. Even non-mathematicians benefit from knowing how mathematical ideas guide AI decisions. It helps you design, debug, and improve systems confidently.
